Are we doing vulnerability management all wrong? Part 2: a better approach (maybe)

Update: part 2.1 is out in response to the NVD’s February 2024 service degradation announcement.

In part 1 of this article series, I opined on how vulnerability management programs tend to be heavily focused on reactive strategies with minimal focus on proactive ones. I proposed an opinionated proactive strategy that I dubbed Proactive Vulnerability Patch Management (PVPM). I even attempted to propose a risk-based prioritization framework to establish how an organization might start ramping up with PVPM, which I shamelessly dubbed Stakeholder-Specific Patching Prioritization (SSPP).

I can only imagine how giddy with excitement Gartner must be with these new ready-to-go cybersecurity marketing acronyms. But what really matters is what InfoSec practitioners make of these ideas, and make of these ideas they did! Below is a sampling of the feedback I got from my fellow InfoSec-ers that resonated with me the most:

Is it considered reactive to fix issues before they are exploited? How do you suppose this gets more proactive? Fixing issues before they arise? Yes. This is security by design. But that's a different domain and different department. Vulnerability management is supposed to identify issues and get them fixed before they are exploited.

One thing your blog post is missing that I'm excited about is secure-by-default. It's *so* much easier when developers are empowered to get the secure configuration from minute zero easily.

It's not either/or, it's both proactive and reactive, every day!

I agree with you that there is too much unused software and we should identify and remove as much of it. But unfortunately it gets much more nuanced. Did you know, while we keep talking about how 80-90 percent of your modern app code is open source, only 12% of the functions/lines-of-code within those libraries are actually used

But what do you expect an engineer to do? They can't simply remove the package because they are using 10 lines of code within it.

In part 2 of this article series, I’m going to do my best to expand on these points, as well as a few other top-of-mind ideas I have about what PVPM can look like in practice.

Let’s dive in!

Proactive vuln management === secure-by-design/default?

Strictly speaking, secure-by-design/default (SBD/D) strategies applied to vulnerability management are necessarily proactive (i.e. entire classes of vulnerabilities are avoided with strong secure SDLC practices, configuration management, etc.). However, there’s more to proactive vulnerability management that secure-by-design/default approaches don’t necessarily account for.

SBD/D approaches applied to vulnerability management can make it so that, on day 1 of a system being provisioned, it’s free of vulnerabilities. However, over time a system’s vulnerability state will change as new vulnerabilities in existing software or configurations are uncovered by researchers and attackers.

If one were to apply a reactive vulnerability management approach in this scenario of using secure-by-design/default systems on day 1, it might look like:

Scan running systems for vulnerabilities

Analyze and triage subset of vulnerabilities to remediate

For mutable systems: schedule & trigger patch deployments for running systems

For immutable systems: manually trigger re-deployment of vulnerable systems using latest vulnerability-free image/template

Conversely, a PVPM approach would look more like this:

For mutable systems: enable auto-patching setting/service/tool to check for and apply latest security updates on a regular basis with scheduled process/system restarts as needed

For immutable systems: schedule systems for automated re-deployment on a regular basis (weekly, monthly, etc.) using latest vulnerability-free image/template

Notably, PVPM doesn’t rely on those first two steps of vulnerability scanning → analysis/triage to drive remediation in already-provisioned systems. PVPM and SBD/D partially overlap with how they help with vulnerability management. But SBD/D doesn’t necessarily extend to post-day-1 of system provisioning.
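To make the contrast concrete, here's a minimal sketch of that PVPM decision logic. Everything in it is hypothetical (the `System` record, the `plan_action` function, the 7-day and 30-day windows); the only point is that the inputs are a system's type and age, not scan results.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical cadences; pick whatever fits your environment.
PATCH_INTERVAL = timedelta(days=7)      # mutable systems: auto-patch weekly
REDEPLOY_INTERVAL = timedelta(days=30)  # immutable systems: redeploy monthly


@dataclass
class System:
    name: str
    immutable: bool
    last_refreshed: date  # last auto-patch run or last deployment


def plan_action(system: System, today: date) -> str:
    """Decide what to do purely from system type and age (no scan results)."""
    age = today - system.last_refreshed
    if system.immutable:
        return "redeploy" if age >= REDEPLOY_INTERVAL else "none"
    return "auto-patch" if age >= PATCH_INTERVAL else "none"


if __name__ == "__main__":
    today = date(2024, 3, 1)
    fleet = [
        System("laptop-42", immutable=False, last_refreshed=date(2024, 2, 20)),
        System("api-service", immutable=True, last_refreshed=date(2024, 1, 15)),
        System("batch-worker", immutable=True, last_refreshed=date(2024, 2, 25)),
    ]
    for s in fleet:
        print(s.name, "->", plan_action(s, today))
```

Notice there's no scanner anywhere in that loop. A scanner still has a job in this model, but it becomes a way to verify that the schedule is working rather than the thing that triggers remediation.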

You could argue that what I’ve described above is the definition of SBD/D, with PVPM merely being a feature of a secure-by-design system architecture. I’m not gonna argue with that because, well, it’s true!

I believe it’s beneficial, though, to be explicit about the distinction between the overarching umbrella category of SBD/D and the much more domain-specific and prescriptive concept of PVPM, such that we as a community are much more focused on figuring out practical ways to implement PVPM processes and tools vs. reiterating abstract InfoSec platitudes. Simply saying “well, that’s just SBD/D, which everyone should be doing anyway” doesn’t progress the conversation forward or drive the invention of more effective vulnerability management practices.

Alright, let’s take a break from acronyms for a moment and talk about proactive vs. reactive vulnerability management.

Reactive vs. proactive: why not both?

Indeed: why not?! While this may not have been clear in part 1 of this article series, I do believe that both reactive and proactive approaches are necessary ingredients for a strong vulnerability management program. Even if we do a perfect job at being proactive, it’s inevitable that we’ll need to keep being reactive, in large part due to 0-day vulnerabilities.

But let me reiterate my core point here: we currently invest far too many resources in reactive approaches at the expense of proactive ones. We have literally hit a point of diminishing returns with reactive vulnerability remediation, as demonstrated by Cyentia’s research on the subject:

The typical organization only fixes about 10% of its vulnerabilities in any given month. And that’s consistent regardless of how many assets are in the environment.

The imbalance between reactive vs. proactive vulnerability management

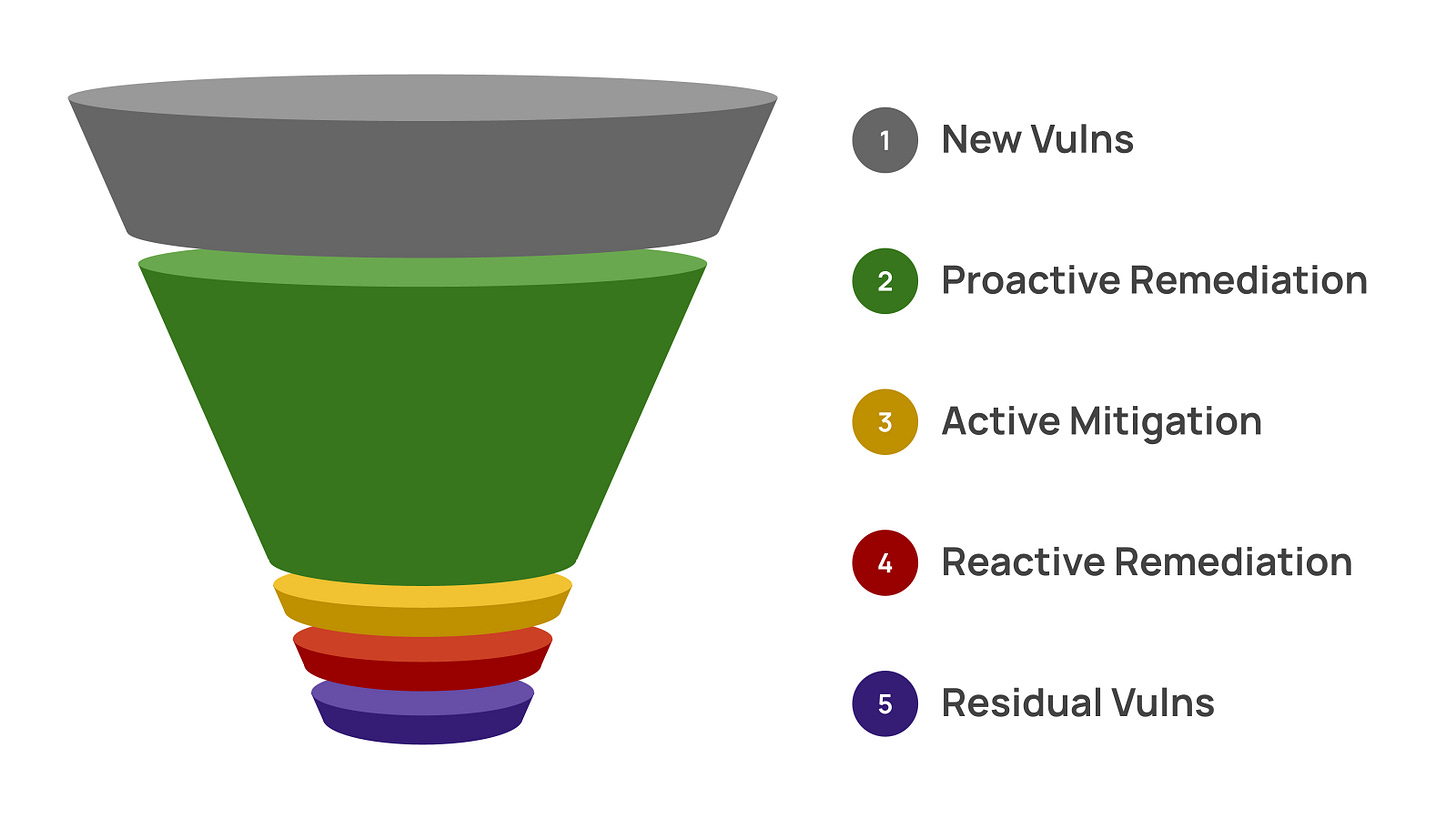

In my mind, there is a significant imbalance around how organizations apply reactive and proactive vulnerability management approaches. In the context of a “vulnerability management funnel” that would make any Gartner analyst swoon from pure envy, this imbalance looks something like this:

Even with reactive remediation capabilities maxed out, an average organization will only be able to fix ~10% of vulnerabilities in their environment per month. At best, an above average vulnerability management program will have automated patch management in place for their easy-to-patch mutable systems, like end user compute devices running Windows, Mac, and (occasionally) Linux. This might allow them to fix ~15% of vulnerabilities in their environment per month.
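Some back-of-the-napkin math shows why that fix rate matters so much. The sketch below is a toy model, not Cyentia's methodology: it assumes a hypothetical steady stream of 100 new vulnerabilities per month and a fixed fraction of the open backlog being remediated each month.

```python
def simulate_backlog(new_per_month: float, fix_rate: float, months: int) -> float:
    """Toy model: each month new vulns arrive, then a fraction of the open backlog is fixed."""
    backlog = 0.0
    for _ in range(months):
        backlog = (backlog + new_per_month) * (1.0 - fix_rate)
    return backlog


def steady_state_backlog(new_per_month: float, fix_rate: float) -> float:
    """Closed-form limit of the model above: B = N * (1 - f) / f."""
    return new_per_month * (1.0 - fix_rate) / fix_rate


if __name__ == "__main__":
    for rate in (0.10, 0.15):
        print(f"fix rate {rate:.0%}: backlog settles near "
              f"{steady_state_backlog(100, rate):.0f} open vulnerabilities")
```

Under these made-up assumptions, the backlog settles at roughly nine months' worth of new vulnerabilities with a 10% fix rate, and a bit under six months' worth at 15%. Better, sure, but still a permanent pile.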

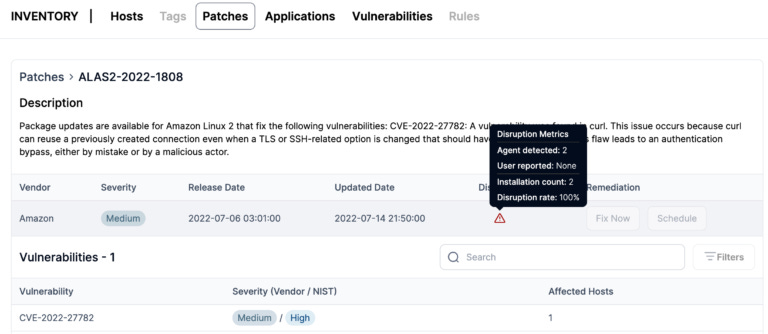

In these cases, though, it's likely that organizations are still engaging in reactive vulnerability remediation: patch management tools are configured to automatically deploy patches only when certain types of vulnerabilities with specific attributes are detected. Sometimes this reactive scan → triage → patch approach is fully handled by the patch management tool itself (which probably won't have the most comprehensive vulnerability database) or it’s triggered by a vulnerability scanner that integrates with said patch management tool (which introduces complexity-induced issues, like inconsistencies with normalizing and mapping data between tools, or each tool's vuln database not containing the same CVEs, etc.).

Immutable systems are a whole different story. For all the benefits immutable systems provide, they are undoubtedly a vulnerability management footgun. Need to remediate a single vulnerability in an immutable infrastructure environment? Awesome! Just follow this super-fast-and-simple, definitely-not-cumbersome process that flows across three distinct systems (SCM → CI → CD):

Kick off base image creation process with vulnerability remediation technique applied

Update CD pipeline and runtime scaling configs to utilize new base image

Never forget: they built it, they own it! “They” being software developers (i.e. service owner teams). So, you’ll now make a heartfelt plea to them to re-deploy their service utilizing this new base image at their earliest convenience, ideally sooner rather than later so InfoSec will stop nagging you

Respond to a bunch of pages when stuff inevitably hits the fan

Roll back these changes and defer this pesky security work to Q5 20never.

Sorry, I lied: this is clearly the dictionary definition of cumbersome. The inherent difficulty of even reactively remediating vulnerabilities in these types of environments results in a lot of avoidance with having to engage in this process at all. Over time, immutable infrastructure ends up being riddled with a lot of lingering vulnerabilities that get patched occasionally or only when push comes to shove.

Don't get me wrong: it's 1000% possible to streamline this redeploy-to-patch process. Some organizations have automated this process to a large extent, even going as far as proactively redeploying services on a regular basis with the latest patched base image. But it requires a concerted and coordinated effort for organizations to get to this state of maturity and, as far as I know, few organizations have achieved this state.
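For what it's worth, the automated version of this doesn't have to be exotic. Here's a rough sketch of a staged redeploy with automatic rollback. The `deploy` and `healthy` callables, the service names, and the image tags are all placeholders for whatever your CD tooling actually exposes; this isn't modeled on any specific product.

```python
from typing import Callable, Dict, List


def staged_redeploy(
    services: List[str],
    current_images: Dict[str, str],
    new_image: str,
    deploy: Callable[[str, str], None],
    healthy: Callable[[str], bool],
    batch_size: int = 2,
) -> List[str]:
    """Redeploy services onto new_image in batches.

    If any service in a batch is unhealthy afterwards, the whole batch is
    restored to its previous image and the rollout stops.
    Returns the services that ended up running new_image.
    """
    updated: List[str] = []
    for start in range(0, len(services), batch_size):
        batch = services[start:start + batch_size]
        for svc in batch:
            deploy(svc, new_image)
        if all(healthy(svc) for svc in batch):
            updated.extend(batch)
            continue
        # Something broke: restore the previous image for this batch and stop.
        for svc in batch:
            deploy(svc, current_images[svc])
        break
    return updated


if __name__ == "__main__":
    running = {"auth": "base:1", "billing": "base:1", "search": "base:1"}

    def fake_deploy(service: str, image: str) -> None:
        running[service] = image

    def fake_healthy(service: str) -> bool:
        return True  # pretend every health check passes

    done = staged_redeploy(list(running), dict(running), "base:2",
                           fake_deploy, fake_healthy)
    print("now on new image:", done)
```

Small batches plus an automatic rollback are what take the "respond to a bunch of pages" step out of the process: a bad image only ever touches one batch before the rollout stops itself.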

Then there are self-hosted vendor software products. I’ve rarely seen or heard of any organization engaging in proactive remediation for these types of systems. Maybe that's part of the reason why there have been so many high-profile breaches caused by exploitation of these vuln-infested products. Think about how awful 2023 was for organizations that were running even just a few of the self-hosted vendor products that were ruthlessly exploited last year. I wouldn’t be surprised if a good number of organizations were using 5 or more of these products last year and had to deal with regular emergency patching exercises that burned out their teams:

It doesn't help that the update process for each vendor's product varies wildly: some allow automated updates to be enabled, others don't; some require you to log in to a support portal site and dig through 5 layers of menus to download an installer locally and push it to your devices with a proprietary GUI tool; others recognize you’re a living, breathing human and would never subject you to this level of cruelty.

And it especially doesn't help that there aren't really any vendor-agnostic patch management tools that make it easy to centrally and automatically deploy patches across such a diverse set of vendor products. A typical organization might have one vendor for their file transfer system, one for their next gen firewalls, one for their VPN, one for their virtual desktop infrastructure, one for - ok, you get the idea.

Sounds like the perfect kind of problem for a security startup to tackle?!

But I digress…

Re-balancing our focus on proactive vulnerability management

Back to my original premise: what if we pivoted our focus toward maxing out our proactive vulnerability management capabilities first and foremost? Yes, we still need to do reactive vulnerability management. Yes, active mitigations are still a necessary part of our strategy. Yes, we’ll probably still have residual vulnerabilities in our environment.

However, if we do this right and we do it well, we should have far, far fewer residual vulnerabilities lingering in our environment with a lot of time savings to boot. Behold: yet another Gartner-analyst-swoon-inducing vulnerability management funnel:

Imagine if we lived in this world instead of the one we currently occupy, a world where we only need to perform reactive vulnerability management in the context of zero day vulnerabilities and new classes of misconfigurations.

MOVEit-style mass exploitation events lasting months quickly become a thing of the past. When active exploitation of an N-day vulnerability starts to pick up, most organizations would have already proactively installed the latest security update without waiting to sift through vulnerability scan results before triggering their emergency vulnerability incident response process.

Would this state be very hard to achieve? Heck yes.

Would it be worth it? A thousand times yes.

Is it possible to do? I believe so, yes. Think about this: in the 1960s, using 1960s-era technology, we strapped a few humans inside a metal box, precisely launched them hundreds of thousands of miles to the Moon, had them bop around on its surface - in hard vacuum - using “portable atmosphere” suits, and then safely brought them back to Earth by (slowly) crashing them into the ocean. In the 1960s. 60 years ago.

By comparison, this should be a walk in the park.

In fact, we’ve already started making progress in this direction as an industry. Proactive vulnerability management tools exist today.

Let’s take a look at some of them, shall we?

Proactive vulnerability management tools: some examples

Disclaimer: I’m about to discuss some open source and commercial tools, largely from a point of excitement about what I currently see as useful examples of tools that fit the PVPM model. I was not compensated in any way, shape, or form for discussing these tools in a positive light. Likewise, these are personal opinions, not professional endorsements, and as such you should use your judgment when exploring or utilizing these tools.

Containers

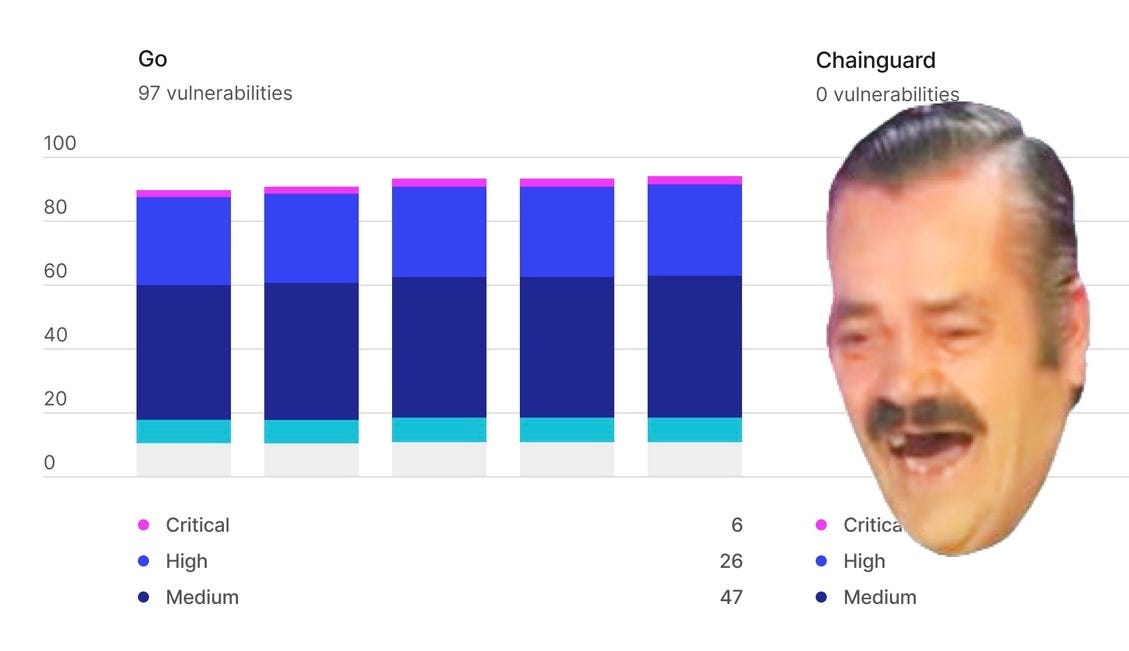

Chainguard: someone call Gartner and tell them it’s time to coin the term “Proactive Vulnerability Annihilation (PVA)” because that’s exactly the capability Chainguard provides as the de facto visionary leader in this totally-made-up-just-for-this-article product category (credit goes to Jordi Mon Companys for inspiring this name). Chainguard Images are the best example of Proactive Vulnerability Patch Management (PVPM) in practice: unused software components are stripped out or not added in the first place, and all known CVEs for included software components are patched before a container image is published.

In other words: Chainguard takes a highly disciplined and methodical approach to proactively yeeting all known CVEs into /dev/null each time they produce a new freely-available, zero CVE container image. It’s like vulnerability management magic!

(No, they didn’t pay me to write this (yes, they probably should have (lolol I’m just kidding, Dan!!(…or am I?))))

General purpose OSes

macOS

Patchomator & App Auto-Patch: both of these open source tools automatically install the latest updates for popular macOS apps without giving a hoot about what your vulnerability scanner has to say about detected CVEs. While these are easy to run locally, they are also designed to be deployed via Jamf or other MDM tools. App Auto-Patch also has a nice SwiftDialog UI/UX that can be customized to be fully interactive for end users, partially interactive, or totally silent. They both use Installomator under the hood for consistent and secure automated installation of the latest version of hundreds of software packages. Hooray for open source and the Mac Admins community!

Windows, macOS, Linux

Vicarius: they're a newer player in a space formerly dominated by BigFix and probably-currently being dominated by Automox. I hadn't heard of them until someone mentioned them in response to part 1 of this article series (thanks, Michael!)

While Vicarius doesn’t necessarily focus on the “remove unused software” part of PVPM like Chainguard does (although I’m sure with some fiddling you could make their automation functionality do this), they seem to have a strong approach to proactively patching a lot of different software across these three OSes. At the time of this writing, they support patching 523 Windows apps (including common enterprise software like Splunk, presumably the Splunk agent), 646 macOS apps, and 2077 Linux apps. I suspect they're piggybacking off well-established package managers, but hey, good for them if that's the case! No need to reinvent the wheel here.

As an added benefit, they also have some active mitigation features called “Patchless Protection” which especially help with 0-day scenarios when a patch isn't yet available. It seems IPS-esque, as if it’s…extending traditional IPS functionality to cover specific…endpoint software…like some sort of…I dunno…eXtended Endpoint IPS…an…X…E…IPS?

An XEIPS?!

We could pronounce it as “ZEE-ps”!!!

(God I hope someone from Gartner is reading this…)

trackd: even newer than Vicarius is trackd. They are the closest thing to a PVPM-centric tool I’ve ever come across: they recognize that the root cause of poor patching practices is fear of patches breaking things. Thus, their key selling point is crowdsourcing telemetry about patch stability to allow you to engage in what I like to call “Fearless Patch Management.” For that reason, a basic version of their product is free and will always be free - give it a shot!

I’m really excited about how trackd can help drive organizations toward fearless, proactive vulnerability patching. Definitely keep an eye on them!
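I don't know how trackd scores patches internally, so treat the following as a purely hypothetical sketch of the general idea: use crowdsourced install outcomes to decide whether a patch is safe to auto-apply. The `PatchTelemetry` fields and both thresholds are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class PatchTelemetry:
    patch_id: str
    installs: int   # installs reported by other organizations (hypothetical field)
    failures: int   # installs that were rolled back or caused disruption


def should_auto_apply(t: PatchTelemetry, min_installs: int = 500,
                      max_failure_rate: float = 0.01) -> bool:
    """Auto-apply only when there is enough data and the observed failure rate is low."""
    if t.installs < min_installs:
        return False  # not enough evidence yet; hold for manual review
    return t.failures / t.installs <= max_failure_rate


if __name__ == "__main__":
    candidates = [
        PatchTelemetry("example-patch-1", installs=1000, failures=5),
        PatchTelemetry("example-patch-2", installs=100, failures=0),
        PatchTelemetry("example-patch-3", installs=1000, failures=50),
    ]
    for c in candidates:
        print(c.patch_id, "auto-apply" if should_auto_apply(c) else "hold")
```

The minimum-installs check matters as much as the failure rate: a patch with zero reported failures across a handful of installs hasn't proven anything yet.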

Source code

Ok, here’s the thing about first-party and third-party code remediation, which every software developer who’s ever had to deal with it knows: it’s an absolute PITA. And while aspects of PVPM like systematically removing unused software make sense in the context of third-party libraries, they don’t really apply to first-party code (unless you’re writing a bunch of vulnerable code that end users would never interact with but attackers could have a field day with, in which case you should probably be banned from using the internet until the end of time).

Likewise, trying to proactively auto-remediate vulnerabilities in third-party source code is a fool’s errand like none other: unless a fix path for a third-party library includes a security patch version, minor and major version updates tend to contain breaking changes that require time intensive analysis, code refactoring, and test suite updates to avoid.

So, it’s pretty safe to say that PVPM just isn’t applicable to the domain of source code vulnerability management.

…

……

………………

………………………………

………………

……

…

PSYCH!

PVPM is totally relevant to source code vulnerability management. There are at least two new products on the market that can help implement PVPM (insofar as it’s possible to do so) for code vulnerability management without it being a total PITA.

From what I can tell, these two products differentiate themselves from existing proactive code vulnerability management patterns and tools, such as:

Secure code libraries that make it easy to avoid entire classes of vulnerabilities (SQLi, XSS, XXE, etc.) by providing securely-defined classes, methods, functions, etc. to developers

SCM and CI integrations that block commits and fail build jobs when certain vulnerability conditions are met

Let’s take a look at how these tools uniquely contribute to a PVPM model for code!

Moderne (and OpenRewrite): Yes, existing software composition analysis (SCA) tools like Snyk and Dependabot can automatically open PRs to update your third-party libraries, and even static application security testing (SAST) tools like Semgrep have “auto-fix” recommendation engines that will use regex matching to make it easy to find-and-replace vulnerable first-party code with remediated code. However, these tools are by default reactive: first, you scan your code for known vulnerabilities - then you remediate them.

Moderne takes a different approach. First, it uses a novel code data model called Lossless Semantic Trees (LSTs) to perform highly accurate, format-preserving, type-aware, error-tolerant code refactoring when remediating vulnerabilities. This is especially valuable when doing version migrations of runtime environments and frameworks (which is necessary sometimes to remediate vulnerabilities or add new security features) across multiple teams’ code (which is a huge pain-in-the-backside change to try to drive). This fits into the PVPM framework in my mind when you start to recognize that these major version migrations can now be done faster and with more confidence, making them easier to do proactively well before a runtime environment or framework end-of-life date comes about.

Because Moderne uses LSTs, it’s in a much better position to thoroughly and accurately understand how code is utilized throughout an application across multiple codebases. This presumably is what makes it possible for them to have a pretty accurate “remove unused imports” recipe, which fits in nicely with the “remove unused software” stage of our PVPM framework.
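To get a feel for what a “remove unused imports” check involves, here's a toy version for Python built on the standard library's `ast` module. To be clear, this is not how Moderne or OpenRewrite work: LSTs carry type and formatting information that a plain syntax tree like this one doesn't, and this sketch only reports candidates rather than rewriting any code.

```python
import ast
from typing import List


def find_unused_imports(source: str) -> List[str]:
    """Return imported names that are never referenced in the module (toy heuristic)."""
    tree = ast.parse(source)
    imported = set()
    used = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                # `import a.b` binds the top-level name `a`.
                imported.add(alias.asname or alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            for alias in node.names:
                if alias.name != "*":  # star imports can't be checked this way
                    imported.add(alias.asname or alias.name)
        elif isinstance(node, ast.Name):
            used.add(node.id)
    return sorted(imported - used)


if __name__ == "__main__":
    sample = (
        "import os\n"
        "import sys\n"
        "from json import dumps, loads\n"
        "print(os.getcwd(), dumps({}))\n"
    )
    print(find_unused_imports(sample))
```

Even this toy shows why accuracy is hard: names that are only re-exported, referenced in strings, or used dynamically will be flagged incorrectly, which is exactly the kind of gap a richer code model is meant to close.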

There is at least one big drawback about Moderne currently: their vulnerability detections and insecure code remediation “recipes” aren’t nearly at parity with your typical SCA and SAST tool. Granted, Moderne is very new, so given enough time and intentional focus, they could certainly build out a rich library of recipes that make it easy to proactively auto-remediate vulnerabilities at scale in ways that existing SAST and SCA tools might struggle with doing.

But don’t take my word for it, either way! Test out Moderne yourself using their free community edition.

Seal Security: this company is basically a software security superhero. They are diving headfirst into other people’s open source codebases and very precisely patching (“sealing”) vulnerabilities in them, even across multiple older versions of a given codebase. Given how new of a company they are, their security patch version repository is pretty well built out, supporting multiple popular languages with a good number of patches for Node libraries especially.

Then they have their seal CLI tool that makes it easy to patch a vulnerable library on demand or on the fly. This makes it much more feasible to proactively patch third-party libraries, knowing that you’re only updating to a patch version where the only code change is a bespoke vulnerability fix, not a breaking change.
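The reason patch-only updates are so much easier to automate is simple enough to express in code. Here's a minimal sketch, assuming libraries follow plain `MAJOR.MINOR.PATCH` semantic versioning (plenty don't, strictly) and ignoring pre-release tags. The function names are mine and have nothing to do with how the seal CLI works.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Parse a plain MAJOR.MINOR.PATCH string; pre-release/build tags are not handled."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def classify_update(current: str, candidate: str) -> str:
    """Return 'patch', 'minor', 'major', or 'none' for a proposed version bump."""
    cur, new = parse_version(current), parse_version(candidate)
    if new <= cur:
        return "none"  # same version or a downgrade
    if new[0] != cur[0]:
        return "major"
    if new[1] != cur[1]:
        return "minor"
    return "patch"


def safe_to_auto_apply(current: str, candidate: str) -> bool:
    """Only patch-level bumps are treated as safe for unattended updates."""
    return classify_update(current, candidate) == "patch"


if __name__ == "__main__":
    for candidate in ("1.4.3", "1.5.0", "2.0.0"):
        print("1.4.2 ->", candidate, ":", classify_update("1.4.2", candidate))
```

A policy like this only holds up if a patch release really does contain nothing but the fix, which is why a security-only patch version for the exact release you're already running is such a useful thing to have.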

Seal Security is making it so that we can have our “third-party code vulnerability auto-remediation” cake and eat it too.

Thank you, Seal Security, for saving the world from insecure software, one patch at a time 🫡

Concluding thoughts

I hope this part 2 article of “Are we doing vulnerability management all wrong?” provided something of value for you. I’d love to get your feedback or perspective on what I’ve laid out here, especially if I’ve misrepresented or misunderstood anything along the way!

Coming up next: part 3 where I cook up a hot take about risk-based vulnerability management and where I see it missing the mark. I may even offer up a universal vulnerability risk rating model that more precisely accounts for realistic risk attributes that more directly correlate with attacker behavior and motivations. Likewise, I’ll sprinkle in some thoughts on risk-based remediation SLAs.

Stay tuned!

Good stuff! Reactive for the 10% where it’s necessary (zero days and so forth); proactive for everything that can be auto-fixed without introducing stability risk to prod systems, and balancing pain inflicted on workstation end users