Are we doing vulnerability management all wrong? Part 2.1: evolving beyond CVEs and the NVD

While I was working on part 3 of this series (title TBD), something strange happened in the world of vulnerability management: NIST’s NVD quietly posted a vague notice on its website about “delays in analysis efforts,” attributed to the time it is spending establishing a “consortium to address challenges in the NVD program.” If you want to learn more about what is going on here, check out Chris Hughes’s really solid overview of the whole situation. It includes research and observations from people outside the NVD that shine a light on the kinds of “challenges” the NVD is facing.

In this interim part 2.1 article, I’m going to explore an idea I started discussing on Dan Lorenc’s LinkedIn post on this subject: that the NVD’s recent service degradation might be a blessing in disguise. It is an opportunity for the InfoSec community to take a step back and seriously ask ourselves: “Is this really the best we can do with vulnerability management? What could or should ‘better’ or ‘best’ look like?”

Let’s dive in to see what we can make of this!

Current vs. future state of our vulnerability management ecosystem

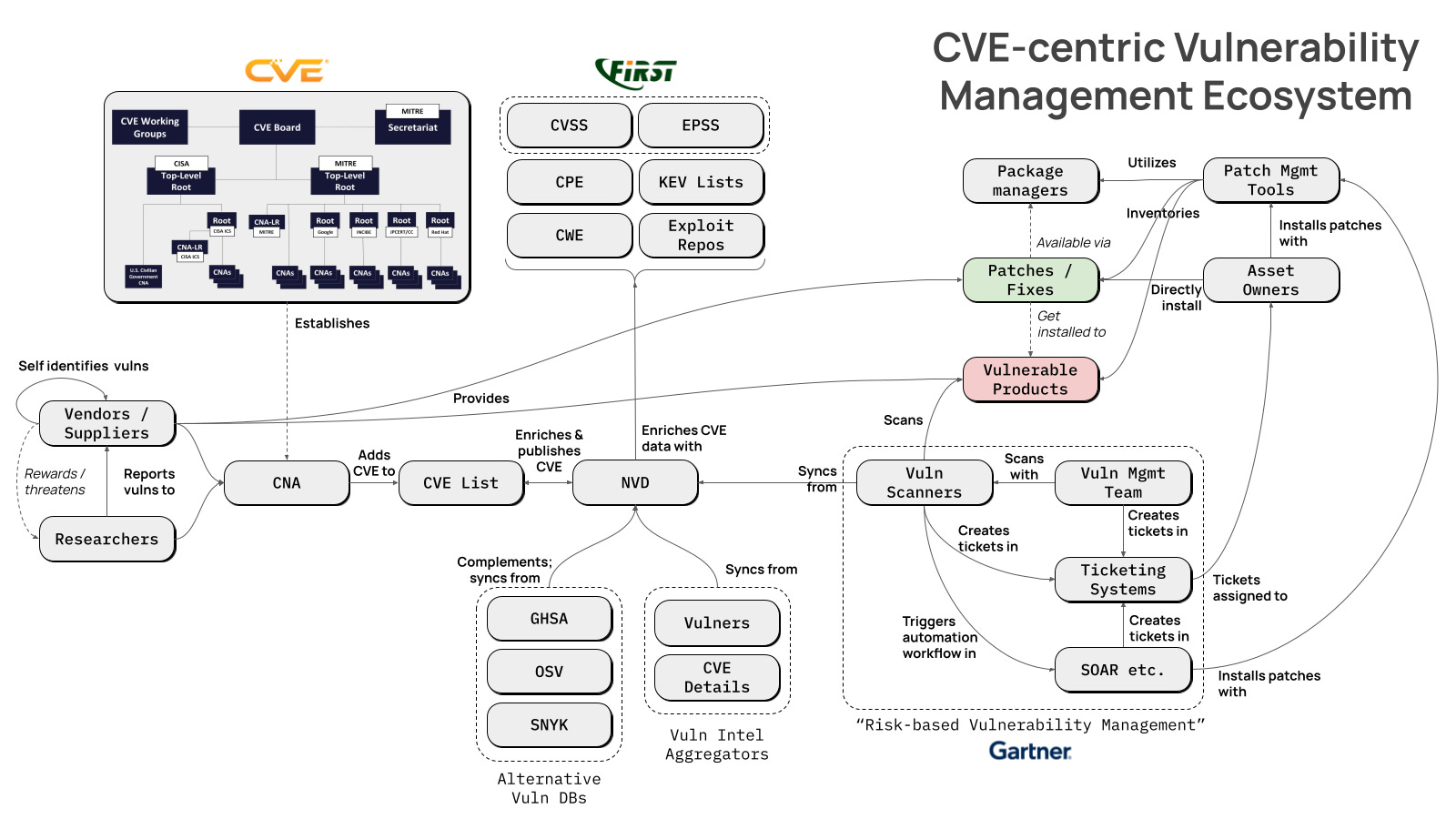

The world and practice of vulnerability management is incredibly, and almost exclusively, CVE-centric. Alternative vulnerability databases do exist, like GitHub Security Advisories (GHSA), Snyk’s vulnerability database, and the Open Source Vulnerabilities (OSV) project, but they merely complement (and synchronize CVE data from) NIST’s NVD. All of our vulnerability management tools, “best” practices, frameworks, etc. are built on the foundation the NVD provides.

I’m a very visual thinker, and I find it helpful to draw out complex ideas and concepts to better capture what they’re all about. To that end, I took a stab at drawing what I see as the key ingredients of our CVE-centric vulnerability management ecosystem.

Geez, I may as well have drawn the digital equivalent of a rat’s nest, yeah? What an absolutely overcomplicated mess this is! Forget risk-based vulnerability management (RBVM): with this many moving parts, we should call it “Rube Goldberg Vulnerability Management” (RGVM).

🥁

*insert laugh track*

We have the CVE Organization (now independent of, but still highly reliant on, MITRE), along with FIRST and NIST’s NVD, basically propping the entire thing up. We’ve also got at least three, if not four, distinct types of tools used to drive remediation of vulnerabilities: vulnerability scanners, ticketing systems, SOAR or other security automation tools, and patch management tools.

I loathe this, if you couldn’t already tell. This is an absurdly complex and inefficient system for a practice of information security that strives to “find and fix vulnerabilities before attackers exploit them.” No wonder attackers are mopping the floor with us, week in and week out. We can’t get out of our own way!

So, what might it look like if we instead reimagined this to be a patch-centric vulnerability management ecosystem, one that strives to “keep software current with the latest security patches” as the primary means to fix vulnerabilities before attackers exploit them?

Look closely at this and think about it for a few minutes.

Why wouldn’t this work?

Why wouldn’t this work as well as our current CVE-centric model?

Try to envision asset owners taking a predictable, structured, and rigorous approach to proactively applying patches using tools like Vicarius (or even free tools like App Auto-Patch) for Windows, macOS, and Linux systems; Seal Security for precise security patch updates to third-party libraries; Moderne for high-confidence code refactoring workflows that shorten mean time to remediate (MTTR) for third-party library and framework updates; or Chainguard for already-fully-patched-for-you container images.

The MacAdmins community has already brought the idea of a patch-centric data feed to life with its SOFA project.

Try to envision how rigorous patch testing and quality assurance practices (drawing inspiration from modern software engineering CI practices especially) could allow us to patch proactively without needing to pick and choose patches based on complex risk-based triage logic and statistical prediction models (as cool as those are!). I’m talking about practices like regression testing and canaries rooted in strong observability and “failed patch” alerting logic.
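To make the canary idea a bit more concrete, here is a minimal Python sketch of a staged rollout that halts on a “failed patch” signal. The ring names, the 2% failure threshold, and the `deploy_and_observe` callback are all hypothetical stand-ins for whatever patching and observability tooling you actually use; the sketch shows only the control flow, not a real integration.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical callback: deploys the patch to every host in one ring, waits for
# post-patch health checks, and returns (hosts_healthy, hosts_failed).
DeployAndObserve = Callable[[str], Tuple[int, int]]


def staged_rollout(
    rings: List[str],
    deploy_and_observe: DeployAndObserve,
    max_failure_rate: float = 0.02,  # assumed threshold; tune per environment
) -> Tuple[List[str], Optional[str]]:
    """Promote a patch ring by ring, canary first.

    Stops at the first ring whose failure rate exceeds max_failure_rate, so
    later rings never receive the patch. Returns the rings that completed
    and the ring that tripped the "failed patch" alert (or None).
    """
    completed: List[str] = []
    for ring in rings:
        healthy, failed = deploy_and_observe(ring)
        total = healthy + failed
        failure_rate = failed / total if total else 0.0
        if failure_rate > max_failure_rate:
            # "Failed patch" alert: halt promotion and hand off to humans.
            return completed, ring
        completed.append(ring)
    return completed, None


# Simulated observations instead of a real deployment tool.
observed = {"canary": (99, 1), "early": (480, 20), "broad": (5000, 0)}
done, halted = staged_rollout(["canary", "early", "broad"], observed.__getitem__)
print(done, halted)  # ['canary'] early
```

The point of the design is that patching is the default and a human only gets pulled in when observability says something broke, rather than a human deciding up front which patches are worth the risk.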

Additionally, newer tools like trackd provide historical patch quality analysis, making it easier to spot patches that are likely to cause problems. You can exclude those from your proactive patching cycle until you’ve had time to test them more rigorously yourself. Rather than picking and choosing which patches to deploy, tools like trackd let you pick and choose which patches to exclude.
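The “exclude, don’t select” idea can be sketched in a few lines of Python. This is not trackd’s data model or API; the `Patch` type, the patch IDs, the failure rates, and the 5% cutoff are all made up for illustration, and any source of historical patch-quality data could feed the lookup table.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Patch:
    patch_id: str  # hypothetical identifier, not a real vendor patch number
    product: str


def select_patches(
    available: List[Patch],
    historical_failure_rate: Dict[str, float],
    threshold: float = 0.05,  # assumed cutoff for a "known troublemaker"
) -> Tuple[List[Patch], List[Patch]]:
    """Deploy by default; hold back only patches with a bad track record.

    Patches with no history are deployed rather than held, which is what makes
    this an exclusion list instead of a risk-based allow list.
    """
    deploy: List[Patch] = []
    hold: List[Patch] = []
    for patch in available:
        rate = historical_failure_rate.get(patch.patch_id, 0.0)
        if rate > threshold:
            hold.append(patch)  # test these more rigorously before rollout
        else:
            deploy.append(patch)
    return deploy, hold


patches = [
    Patch("p-001", "browser"),
    Patch("p-002", "office-suite"),
    Patch("p-003", "vpn-client"),
]
rates = {"p-002": 0.12, "p-003": 0.01}  # made-up historical failure rates
deploy, hold = select_patches(patches, rates)
print([p.patch_id for p in deploy])  # ['p-001', 'p-003']
print([p.patch_id for p in hold])    # ['p-002']
```

Treating “no history” as “deploy” is a deliberate choice in this sketch; a more cautious team could route unknown patches to the canary ring first instead.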

I recently started referring to this overarching approach to rigorous patch testing and QA as Fearless Patch Management™ (Gartner, you need to make this a thing!!!). That’s what we should be talking about as the solution to the long-running, pervasive culture of “fear” about patches breaking things, a fear that gets used as an excuse to patch only the vulnerabilities defenders think attackers are going to exploit.

…

If this still doesn’t make sense as a better alternative to a CVE-centric vulnerability management ecosystem, what different ideas can you imagine that would allow organizations to much more efficiently, effectively, and confidently apply security patches on a continuous basis?

What would we need to do differently, or what tools would we need to build, or what new best practices would we need to invent, to make this the ecosystem that makes the vulnerability management world go round?

I’d love to get a discussion going around what a better future state of vulnerability management looks like: one that leans far more heavily into proactively fixing vulnerabilities (that is, installing security patches) and far away from reactively fixing only a tiny subset of vulnerabilities based on increasingly complicated, nuanced, and presumptuous triage logic.

A quick side note about software supply chain vulnerability management concerns

What if the NVD actually did fizzle out or become obsolete due to concerns about the quality of its data and its ability to maintain it?

What would an NVD-less world of vulnerability databases look like? And how would this impact software supply chain security practices?

Well, I’ve got a picture for that!

Makes sense, right? In this context, SBOMs, along with supporting data from Vulnerability Disclosure Report (VDR) and Vulnerability Exploitability eXchange (VEX) files, play an integral part in supporting a decentralized vulnerability database ecosystem.
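As a rough illustration of the role SBOMs and VEX could play here, the Python sketch below matches advisories (from any database, not just the NVD) against an SBOM’s component list, then suppresses findings that a supplier’s VEX statement marks as not applicable. The data shapes, advisory IDs, and package names are invented; the status strings mirror OpenVEX’s vocabulary, and treating a missing statement as `under_investigation` is an assumption of this sketch.

```python
# Hypothetical, heavily simplified inputs. Real SBOMs (SPDX, CycloneDX) and VEX
# documents carry far more structure than these plain dicts.

# SBOM view: package URL (without version) -> installed version.
sbom_components = {
    "pkg:npm/example-lib": "1.4.2",
    "pkg:pypi/example-client": "2.0.0",
}

# Advisories as they might arrive from any vulnerability database.
advisories = [
    {"id": "EXAMPLE-0001", "package": "pkg:npm/example-lib", "affected": {"1.4.1", "1.4.2"}},
    {"id": "EXAMPLE-0002", "package": "pkg:pypi/example-client", "affected": {"2.0.0"}},
]

# Supplier VEX statements keyed by (advisory, package), OpenVEX-style statuses.
vex_statements = {("EXAMPLE-0002", "pkg:pypi/example-client"): "not_affected"}


def actionable_findings(components, advisories, vex):
    """Match advisories against the SBOM, then drop findings the supplier's
    VEX says do not apply. Missing VEX defaults to 'under_investigation'."""
    findings = []
    for adv in advisories:
        version = components.get(adv["package"])
        if version is None or version not in adv["affected"]:
            continue  # package absent, or installed version not affected
        status = vex.get((adv["id"], adv["package"]), "under_investigation")
        if status in ("not_affected", "fixed"):
            continue  # supplier has stated this finding does not apply
        findings.append((adv["id"], adv["package"], version, status))
    return findings


print(actionable_findings(sbom_components, advisories, vex_statements))
# [('EXAMPLE-0001', 'pkg:npm/example-lib', '1.4.2', 'under_investigation')]
```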

This would be more complex for sure and would require more intentional cooperation and partnership between vulnerability database providers to ensure consistent and high-quality data. Some sort of data synchronization and federation model could make this work at scale.
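One possible shape for that synchronization and federation piece, sketched in Python under loud assumptions: each database publishes records with its own ID plus a list of aliases (similar in spirit to the `aliases` field in the OSV schema), and a consumer groups records that share any identifier so the same vulnerability isn’t counted three times. The record layout, source names, and identifiers are all hypothetical, and a real federation model would also need to reconcile conflicting affected-version data, which this sketch does not attempt.

```python
from collections import defaultdict


def federate(records):
    """Group advisories from different databases that share any identifier
    (their own ID or an alias), using a small union-find over identifiers."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps chains short
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    for rec in records:
        for alias in rec.get("aliases", []):
            union(rec["id"], alias)

    merged = defaultdict(lambda: {"ids": set(), "sources": set(), "packages": set()})
    for rec in records:
        group = merged[find(rec["id"])]
        group["ids"].add(rec["id"])
        group["ids"].update(rec.get("aliases", []))
        group["sources"].add(rec["source"])
        group["packages"].update(rec.get("packages", []))
    return list(merged.values())


# Hypothetical records from three hypothetical databases.
records = [
    {"source": "db-a", "id": "CVE-EXAMPLE-1", "packages": ["pkg:npm/example-lib"]},
    {"source": "db-b", "id": "GHSA-EXAMPLE-1", "aliases": ["CVE-EXAMPLE-1"],
     "packages": ["pkg:npm/example-lib"]},
    {"source": "db-c", "id": "VENDOR-EXAMPLE-1", "aliases": ["GHSA-EXAMPLE-1"],
     "packages": ["pkg:npm/example-lib-fork"]},
    {"source": "db-a", "id": "CVE-EXAMPLE-2", "packages": ["pkg:pypi/example-client"]},
]

for group in federate(records):
    print(sorted(group["ids"]), sorted(group["sources"]))
# ['CVE-EXAMPLE-1', 'GHSA-EXAMPLE-1', 'VENDOR-EXAMPLE-1'] ['db-a', 'db-b', 'db-c']
# ['CVE-EXAMPLE-2'] ['db-a']
```

Note that no single database is authoritative in this model; identity emerges from the aliases each provider publishes, which is exactly why the intentional cooperation between providers matters.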

It would remove our reliance on a single, centralized, highly bureaucratic source of vulnerability data. But maybe it could work?

Concluding thoughts

I don’t doubt that the NVD will bounce back, thanks to the consortium being formed to help it operate more effectively and keep pace with an ever-growing list of newly published CVEs. However, this latest industry issue is as good an opportunity as any for our profession to take a step back and seriously figure out what a better approach to vulnerability management can and should look like.

The sooner we figure that out, the sooner we can bring it to life.

Let’s go!